Cricket Scale, Part 2 - Redis: A Silver Bullet With a Cost

Challenges with Redis in Action

This is an ongoing series on Cricket Scale — a kind of scale that’s different from normal. It’s sudden, spiky, and event-driven, and here I share how we at Probo built systems to handle it.

When we first brought Redis into the mix, everything got faster: API response times dropped, counters updated instantly, and the user experience stayed snappy even during match peaks.

At peak, ~99.7% of requests were served in under 100 ms, with an average response time of just ~15 ms.

Redis gave us the low-latency backbone we needed to keep the platform responsive, even as millions of requests were flowing every minute. It quickly became our default answer for anything that needed to be fast:

Hot caches for APIs

Real-time counters for trades, traders, and portfolios

Sorted sets for leaderboards

Sessions, rate limiting, and throttling

And for a while, Redis felt like the silver bullet.

But Redis also came with its own challenges, and they became more noticeable as we scaled.

The Rug for Our Tech Debt

Redis didn’t just make our systems faster — it also made our problems less visible. In hindsight, we were guilty of sweeping tech debt under the Redis rug.

Slow database queries? Put them behind Redis.

Unoptimized schema? Hide it under a cache.

Missing indexes? Redis will cover it up.

Poorly thought-out architectures? Add Redis in the middle and call it “scalable.”

It worked — until the rug was pulled away. The moment Redis faltered, all the hidden dirt came spilling out: the database choked, the APIs stalled, and the architectural shortcuts stood exposed.
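Here is a minimal cache-aside sketch that makes the rug metaphor concrete. It illustrates the idea under stated assumptions and is not our production code. `FakeCache` and `FakeDB` are hypothetical in-memory stand-ins for Redis and the primary database, and `read_feed` is a hypothetical helper. While the cache is healthy, a thousand reads reach the database once. Once the cache is down, every one of them does.

```python
class CacheDown(Exception):
    """Raised by the fake cache to simulate a Redis outage."""


class FakeCache:
    """Hypothetical in-memory stand-in for Redis (illustration only)."""

    def __init__(self):
        self.store = {}
        self.available = True

    def get(self, key):
        if not self.available:
            raise CacheDown(key)
        return self.store.get(key)

    def set(self, key, value):
        if not self.available:
            raise CacheDown(key)
        self.store[key] = value


class FakeDB:
    """Hypothetical stand-in for the primary database; counts every query."""

    def __init__(self):
        self.queries = 0

    def load_feed(self, key):
        self.queries += 1
        return f"feed-for-{key}"


def read_feed(cache, db, key):
    """Cache-aside read: try the cache, fall back to the database on a miss or outage."""
    try:
        cached = cache.get(key)
        if cached is not None:
            return cached
    except CacheDown:
        # With the cache gone, every request goes straight to the database.
        return db.load_feed(key)
    value = db.load_feed(key)  # cache miss: load once, then populate the cache
    try:
        cache.set(key, value)
    except CacheDown:
        pass
    return value


cache, db = FakeCache(), FakeDB()
for _ in range(1000):
    read_feed(cache, db, "home")
print(db.queries)  # 1: only the first request misses

cache.available = False  # simulate Redis going down
for _ in range(1000):
    read_feed(cache, db, "home")
print(db.queries)  # 1001: every request now hits the database
```

Nothing in the read path is wrong. The database simply absorbs a request rate it was never sized for, and that is exactly what a Redis outage or a chaos test exposes.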

The lesson for us was clear: Redis is excellent for performance, but it’s not a substitute for good database practices or sound architecture.

We learned this the hard way. Sometimes it was a Redis downtime that exposed all the hidden issues at once. Other times, it was during load testing combined with a bit of chaos testing that showed just how fragile the underlying systems really were.

Not All Redis Data Is Equal: Ephemeral vs. Permanent

Redis shines when it’s used for ephemeral data: sessions with TTLs, rate limiting, short-lived caches. If Redis goes down, the system can repopulate or tolerate the loss.

But things get tricky when Redis is used for permanent data — like leaderboards without TTLs. Suddenly, Redis isn’t a cache anymore; it’s acting as a database, but without durability guarantees. And when it’s not available, the question becomes less technical and more product-driven:

What should the user see when Redis is down?

Do you compute the leaderboard on the fly? That is expensive and slow.

Or do you handle it gracefully? For example, showing a message that “leaderboard is being built” or temporarily hiding the feature.

This is where having a product mindset matters. If Redis is critical to the experience, you need a fallback plan that puts the user first — whether that’s hiding the feature, showing stale data, or communicating clearly that it’s temporarily unavailable.
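One way to encode that fallback order is a small function that tries live data first, then a stale snapshot, then a friendly message. Treat it as a hedged sketch. `read_live` and `read_snapshot` are hypothetical callables, and the idea that a snapshot is periodically copied to a durable store is an assumption. The built-in `ConnectionError` stands in for whatever error your Redis client raises when it cannot connect.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class LeaderboardView:
    status: str                    # "live", "stale", or "building"
    entries: list                  # (user, score) pairs, best first
    message: Optional[str] = None  # text shown to the user, if any


def leaderboard_view(
    read_live: Callable[[], list],
    read_snapshot: Callable[[], Optional[list]],
) -> LeaderboardView:
    """Degrade gracefully: live data, else a stale snapshot, else a friendly message."""
    try:
        return LeaderboardView("live", read_live())
    except ConnectionError:
        pass  # assumption: stand-in for the client's "Redis unreachable" error
    snapshot = read_snapshot()  # hypothetical: last copy saved to durable storage
    if snapshot is not None:
        return LeaderboardView("stale", snapshot, "Showing scores from a few minutes ago")
    return LeaderboardView("building", [], "Leaderboard is being built")


def redis_down():
    # Simulates the client failing to reach Redis.
    raise ConnectionError("redis unavailable")


print(leaderboard_view(redis_down, lambda: [("asha", 120)]).status)  # stale
print(leaderboard_view(redis_down, lambda: None).message)  # Leaderboard is being built
```

Which branch ships, and in what order, is the product decision described above. The code only makes that decision explicit and testable.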

Redis taught us that system design and product design can’t be separated. At scale, resilience isn’t just about infra choices; it’s also about how you want users to experience failure.

Hot & Heavy: The Trouble with Keys at Scale

One of the toughest Redis lessons came during a high-stakes India cricket match. Traffic spiked, DAUs surged, and suddenly our app started crashing. The events feed — the most visible part of the experience — stopped working.

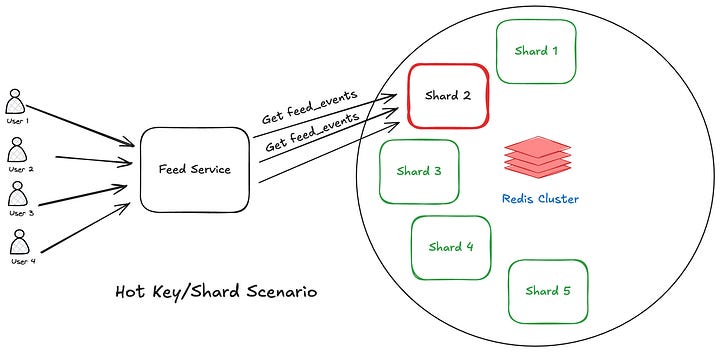

On debugging, we discovered Redis response times had shot up. The culprit: a hot key.

For simplicity, think of it as one prebuilt event feed cached under a single Redis key. (In reality, we had multiple keys for combinations such as user segment and language, but the point remains the same.) That feed was updated asynchronously by an algorithm, and every request read from that key.

On normal days, this design held up. But when India played and tens of millions of users came in at once, that one key became a single point of failure, even though Redis was running in a cluster. Redis Cluster maps each key to exactly one hash slot, and each slot is owned by one shard. As a result, all the load funneled into that shard and overwhelmed it, while the other shards stayed underutilized.

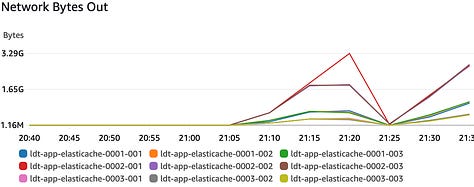

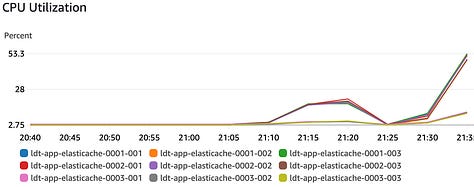

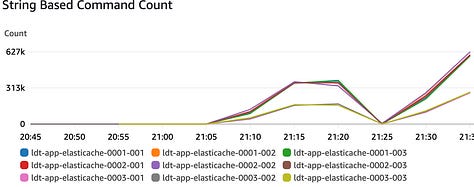
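The way Redis Cluster places keys shows why running a cluster doesn't help with a single key. It hashes each key with CRC16 and takes the result modulo 16384 to pick a hash slot. If the key contains a non-empty `{...}` hash tag, only the tag is hashed. The sketch below re-implements that mapping for illustration only, since your client library already does it. The key names are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM variant), the checksum Redis Cluster uses for key hashing."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_slot(key: str) -> int:
    """Map a key to one of 16384 slots, honouring {hash tags} the way Redis Cluster does."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # non-empty tag: hash only the part inside the braces
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


print(hex(crc16_xmodem(b"123456789")))  # 0x31c3, the check value in the Redis Cluster spec
# The same key always lands on the same slot, and therefore on the same shard.
print(hash_slot("feed:en") == hash_slot("feed:en"))  # True
# Keys that share a hash tag are forced onto one slot.
print(hash_slot("{feed}:en") == hash_slot("{feed}:hi"))  # True
```

The slot depends only on the key's bytes, so adding shards never changes where `feed:en` lives. The only way to spread its reads is to serve it from more places: replicas, extra keys, or a local cache.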

The first bottleneck we hit wasn’t CPU or memory — it was network throughput. That shard simply couldn’t push responses fast enough. We also realized our key size made the situation worse: the feed payload was large, so every request pushed a heavy response over the network.
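A back-of-the-envelope calculation shows why network throughput ran out first. The payload size and request rate below are hypothetical and only illustrate the arithmetic. RESP and TCP overhead are ignored. Under those assumptions, a 50 KB value read 100,000 times per second means one shard must push roughly 41 Gbit/s.

```python
def required_gbps(payload_kb: float, requests_per_sec: float) -> float:
    """Egress bandwidth (Gbit/s) needed to serve one value at a given request rate.

    Ignores protocol and TCP overhead, so real numbers would be somewhat higher.
    """
    bytes_per_sec = payload_kb * 1024 * requests_per_sec
    return bytes_per_sec * 8 / 1e9


# Hypothetical numbers, not measurements from the incident:
print(round(required_gbps(payload_kb=50, requests_per_sec=100_000), 1))  # 41.0
print(round(required_gbps(payload_kb=25, requests_per_sec=100_000), 1))  # 20.5
```

The cost scales linearly with payload size. Halving the value, whether by trimming it or compressing it, halves the bandwidth one shard has to sustain.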

In later years, hot keys kept coming back, this time as high CPU utilization and latency spikes on particular shards.

There are several ways to address hot keys:

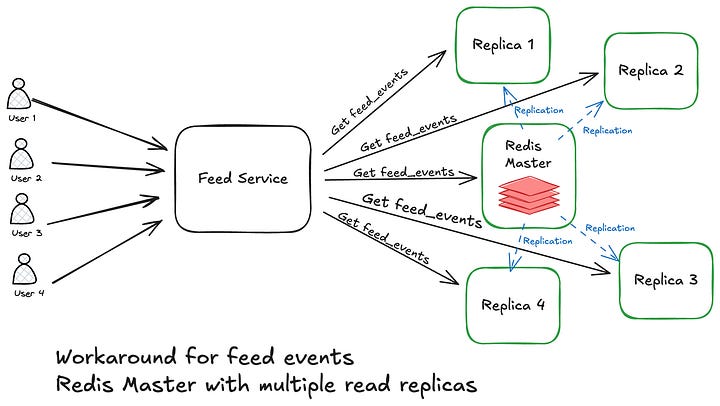

Multiple read replicas: adding replicas and enabling reads from them to spread the load

Distributing load across multiple keys with the same value, to avoid a single point of pressure. This would involve some code changes to your cache get method.

Client-side caching at the Kubernetes pod level, so not every request had to hit Redis. This reduced load on Redis, but brought its own challenges — especially update propagation and cache invalidation across hundreds of pods.

Compression for big keys where payload size couldn’t be reduced at the source. This helped ease network throughput issues, but added CPU overhead to compress and decompress every payload.
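The multiple-keys option can be sketched as storing N identical copies under different names and reading a random one. This illustration rests on assumptions. The dict stands in for a Redis Cluster, and `copy_keys`, `write_hot`, `read_hot`, and `N_COPIES` are hypothetical names. In a real cluster, copy names without a shared `{hash tag}` usually hash to different slots, and that is what spreads the load.

```python
import random

N_COPIES = 8  # hypothetical number of copies per hot key


def copy_keys(key: str, n: int = N_COPIES) -> list:
    # No {hash tag} in the suffix: otherwise every copy would land on the same slot.
    return [f"{key}:copy:{i}" for i in range(n)]


def write_hot(store: dict, key: str, value: str, n: int = N_COPIES) -> None:
    # Writes fan out to every copy; tolerable when updates are infrequent.
    for k in copy_keys(key, n):
        store[k] = value


def read_hot(store: dict, key: str, n: int = N_COPIES, rng=random):
    # Each read picks one copy at random, so reads are spread across the copies.
    return store.get(f"{key}:copy:{rng.randrange(n)}")


store = {}  # stand-in for the cluster
write_hot(store, "feed:home", "payload-v1")
print(read_hot(store, "feed:home"))  # payload-v1
print(len(store))  # 8
```

The trade-off is on the write side. Every update fans out to N keys, and for a brief window different readers may see different versions. That is acceptable for an infrequently updated feed, but less so for data that changes every second.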

In our case, since this was a feed, the writes (i.e., the feed update logic) weren’t very frequent. That meant replication lag wasn’t a concern, which made a Redis master/replica setup a clean fit. We scaled Redis simply by enabling reads from replicas. The Redis client we used (ioredis) even exposed this as a simple boolean flag, which made the shift straightforward, and we paired it with instance types that offered higher network throughput.
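ioredis does the read routing internally once replica reads are turned on, so there is nothing to hand-write here. The toy router below only illustrates the idea. Plain dicts are hypothetical stand-ins for the primary and its replicas, and `ReplicaRouter` is a hypothetical name. Writes go to the primary, and reads rotate round-robin across replicas. Replication here is synchronous, so the sketch hides the lag that makes this approach a good fit only for infrequently written data like our feed.

```python
import itertools


class ReplicaRouter:
    """Toy router: writes go to the primary, reads rotate across replicas.

    Nodes are plain dicts standing in for Redis servers. Replication is simulated
    synchronously, so unlike a real setup there is no replication lag.
    """

    def __init__(self, primary: dict, replicas: list):
        self.primary = primary
        self.replicas = replicas
        self._next = itertools.cycle(range(len(replicas)))
        self.reads_served = [0] * len(replicas)

    def set(self, key, value):
        self.primary[key] = value
        for replica in self.replicas:  # stand-in for asynchronous replication
            replica[key] = value

    def get(self, key):
        i = next(self._next)  # round-robin choice of replica
        self.reads_served[i] += 1
        return self.replicas[i].get(key)


router = ReplicaRouter(primary={}, replicas=[{}, {}, {}])
router.set("feed:home", "payload-v1")
for _ in range(9_000):
    router.get("feed:home")
print(router.reads_served)  # [3000, 3000, 3000]
```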

Costing and Migrations: The Price of Speed

Our Redis journey wasn’t only about performance — cost and operations played a big role too.

We started with self-hosted Redis, which worked fine in the early days. But as scale grew, the cracks began to show:

Downtime: our setup couldn’t keep up with rapid traffic spikes, and the feed (again) was the culprit.

Recovery pain: bringing Redis back after failures was slow and messy, especially under load.

Management overhead: handling failovers, patching, and scaling manually took too much engineering time.

The first big shift came when we migrated to AWS ElastiCache. That move gave us reliability and faster operations, but it also introduced new challenges:

To meet higher memory requirements, we had to pick larger instances.

That meant running 8-core or 16-core machines even though Redis executes commands on a single thread, so most of those extra cores just sat idle. We were paying for CPU we couldn’t use, simply to get more memory.

This led to inefficient resource utilization, and since managed offerings from AWS aren’t cheap at scale, the cost quickly became significant.

Eventually, we moved again, this time to Redis Labs (now Redis Enterprise). Surprisingly, it turned out to be cheaper at the same scale. Our guess is that Redis Enterprise uses resources differently, possibly by running multiple Redis processes on the same underlying machine, which reduces waste compared to ElastiCache. Whatever the exact reason, it gave us a better balance between cost efficiency and operational reliability.

The Obvious Good Practices (That Are Easy to Forget)

A lot of Redis pain can be avoided by sticking to the basics. These aren’t fancy tricks — just good hygiene that makes a big difference at scale:

Monitor slowlogs: keep an eye on commands that take longer than expected. They’re often early warnings of a bigger issue.

Choose data types thoughtfully: don’t just reach for strings. Sorted sets, hashes, and bitmaps all have trade-offs in memory and performance.

Set TTLs by default: ephemeral data should expire on its own; otherwise, you risk memory bloat.

Avoid big keys: break payloads into smaller chunks to reduce network and serialization overhead.

Watch replication lag: especially if you’re reading from replicas under heavy load.

Plan for failover: assume Redis will go down at some point and decide what users should see when it does.
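Here is a hedged sketch of the big-key and TTL habits together. It splits a large value into numbered chunks plus a small manifest key, then reassembles it. `CHUNK_SIZE`, `DEFAULT_TTL_SECONDS`, and the key naming scheme are hypothetical choices. Chunk keys without a shared `{hash tag}` usually land on different slots, so one large value no longer has to be served entirely by one shard. The commented redis-py lines are not executed here. They show how every chunk could be written with the same TTL so the pieces expire together.

```python
import math

CHUNK_SIZE = 64 * 1024     # hypothetical chunk size in bytes
DEFAULT_TTL_SECONDS = 300  # hypothetical default expiry for cached data


def split_into_chunks(key: str, payload: bytes, chunk_size: int = CHUNK_SIZE) -> dict:
    """Split one large value into numbered chunk keys plus a small manifest key."""
    count = max(1, math.ceil(len(payload) / chunk_size))
    entries = {f"{key}:meta": str(count).encode()}
    for i in range(count):
        entries[f"{key}:chunk:{i}"] = payload[i * chunk_size:(i + 1) * chunk_size]
    return entries


def join_chunks(key: str, store: dict) -> bytes:
    """Reassemble the value; a missing chunk means the whole value is treated as a miss."""
    count = int(store[f"{key}:meta"])
    parts = [store.get(f"{key}:chunk:{i}") for i in range(count)]
    if any(p is None for p in parts):
        raise KeyError(key)
    return b"".join(parts)


# With redis-py (not executed here), each entry would get the same TTL:
#   pipe = r.pipeline()
#   for k, v in entries.items():
#       pipe.set(k, v, ex=DEFAULT_TTL_SECONDS)
#   pipe.execute()

store = split_into_chunks("feed:home", b"x" * 150_000)
print(len(store))  # 4: one manifest + 3 chunks
print(join_chunks("feed:home", store) == b"x" * 150_000)  # True
```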

At cricket scale, these fundamentals turn from “nice to have” into “must have.”

Final Thoughts

Redis was one of the most valuable tools in our stack. It helped us achieve p99 latencies under 100 ms at scale and kept the platform responsive during peak cricket moments.

At the same time, it highlighted the importance of using the right tool for the right job. Redis worked very well for certain use cases, but it also showed its limits when we stretched it too far.

The takeaway for us: Redis isn’t a silver bullet. It’s extremely effective when applied thoughtfully, but it can create challenges if relied on without careful design.